With the 2020 elections approaching and political discussion growing increasingly heated, the threat of social media manipulation and election meddling by external actors is becoming more and more tangible. Indeed, social media platforms can be abused through automation, impersonation, and the deliberate dissemination of false information in order to alter organic socio-political discussion.

At the same time, the culturally contradictory and highly sensitive nature of these malicious activities often shifts the conversation away from the threat itself and towards political statements and social disputes. These disputes focus on nation-state actors and government-led threat actor teams such as the Internet Research Agency, while the massive and highly developed infrastructure of cybercrime and for-profit private threat actor groups often remains outside the discussion.

In this joint research by AdvIntel, with NatSec commentary by Nine Mile Security Group (NMSG), we focus on the non-state, “shadow” side of political meddling. We investigate the Tactics, Techniques, and Procedures (TTPs), infrastructure, and methods used by foreign, non-politically motivated threat actors who are willing to participate in illicit activities aimed at influencing the US political environment.

Policy, Privacy, and Social Media (by Nine Mile Security Group)

The 2008 Presidential Election, unlike any before it, contributed to the meteoric rise of social media as a tool for targeting, advertising, and in some cases misrepresenting information to United States citizens. Against a backdrop of noticeable social and cultural changes that have either widened or highlighted long-standing divides, hackers, cyber-criminals, and everyone in between have raced to fill the void with agendas of their own.

Longstanding and relatively safe topics like Education and, to some extent, Immigration have become both wedge issues and legitimate security issues. Whether the debate centers on assistance to low-income, inner-city minorities, public loan forgiveness for borrowers who are often part of the same community, or pathways to citizenship for those seeking American opportunity, one thing has been clear: bad actors have weaponized the dissatisfaction of voters for whom these issues are critically important, using it to manufacture and oftentimes provoke discord.

The massive collection of innumerable data points by government and big business alike has lately come into starker relief for the general public. And now data, the most valuable commodity on earth, has also proven to be the least understood. According to a survey conducted by the research firm IPSOS, sixty-six percent of people knew very little about how much data companies (and, by extension, the hackers and cyber-criminals who later pilfer that data) held about them, or what was done with it. Additionally, only about thirty-three percent had a fair amount of trust in companies and the government to use it in the right way. These statistics are part and parcel of what provides fertile ground for the social media-based disinformation campaigns that bad actors cultivate and levy for nefarious purposes.

For instance, the ‘social media trap’ set by foreign agencies has met with widespread success in recent years. It is essentially a mechanism by which people get trapped based on some action taken, such as likes and comments on their posts by unknown people. If the target is military, crucial information about logistics, operational details, training institutions, weapons and ammunition, troop movements, and other vital matters is collected.

Politics, Business, and National Security

Data can be harvested from a cornucopia of sources for both political and business use, some of the best known being web browsers, ride-sharing platforms, peer-to-peer funds transfer apps, and the dark web itself. With a dearth of federal regulation that uniformly and explicitly addresses how political campaigns, or businesses writ large, ought to collect, use, and share data, there are few if any safeguards that effectively stymie or prevent bad actors from targeting and exploiting the public for ill-suited purposes.

Consider that during the 2016 Presidential Election (and again for 2020), employees from top social media companies were embedded in both Republican and Democratic campaigns as “digital strategists.” While this is entirely part of our democratic process, it also, to some extent, widens one particular divide: access to the specific tools used to create highly targeted ads delivered via social media to an ideal voter or bloc of voters.

Sound familiar? That is because bad actors employ the very same tactics, albeit for completely opposite purposes, with the intent of further fracturing an already polarized public.

Cyber-threats, nefarious activity, and attacks are top of mind within the business community. The 2019 “Global Cyber Risk Perception” survey polled over one thousand businesses from Latin America, the Caribbean, Europe, the United States, Canada, Asia Pacific, and Africa on the security climate. Some of its key takeaways were:

Seventy-nine percent view cyber-attacks as a top-five priority

Only 17% of executive leaders and board members spent more than a few days focusing on cyber risk

Top two attack zones: Cloud Computing and IoT Devices

So, what does all this mean for the future of our collective security? Where you stand largely depends on where you sit, but a few things are certain:

The sheer financial cost of cybercrime is already in the billions of dollars – and rises year over year.

We need to understand who our adversaries are, where they’re located, and what their capabilities, plans, and intentions are.

The dividing lines are at best fluid, public debate is fever-pitched, and threat actors are fast gaining ground.

Time is of the essence; we truly need all hands on deck.

Research Methodology: Ethics & Safety First

Given the extreme sensitivity of the issue, we believe it is essential to disclose the key points of this research and the methodology used in the investigation.

To build a comprehensive understanding of the threat landscape, AdvIntel relied on the adversary perspective, meaning that we investigated tactics shared by the actors themselves. We initiated engagements with over 40 foreign cybercriminals and professional meddlers who emphasized their willingness to take part in political manipulation activity. At the core of this group were high-profile threat actors previously tracked by our Subject Matter Experts (SME) team, who had a proven record of active participation in elite cybercrime communities.

We declined all offers from threat actors to conduct proof-of-concept/proof-of-value (POC/POV) operations. These operations were offered to be conducted in a non-US social environment. However, AdvIntel believes that disinformation activities, even when conducted on a minor scale for research purposes, are deeply unethical and immoral. Additionally, we declined all offers to create sample POC/POV materials, such as political content writing, videos, or any other artifacts that could have been used in an actual manipulation campaign after our investigation was finished.

Even though the strategies shared by the threat actors emphasized teamwork and tight cooperation between the different groups of cybercriminals involved in a campaign, we did not introduce the actors to each other or create any joint chats or spaces in which they could communicate. This was done to avoid creating social capital that threat actors could use in future malicious activities.

As our main focus was the adversary perspective, we relied on the actors to share strategies themselves. As a result, most actors asked for specific details about the political environment in which they would need to operate, in order to develop the most specific and efficient ways of manipulating it. We were able to convince the actors to model their approaches on the most abstract case possible: a non-existent US state with a population of 10-15 million people going through Congressional elections. We deliberately avoided any modeling based on real US communities, jurisdictions, or locations.

Finally, while designing the investigation, we consulted with security researchers and academic experts to ensure that we avoided even indirect facilitation of any damage to the US social, cultural, or political fabric.

Step 1. DarkWeb Politics

To assess the threat landscape we asked a simple question - what will it take for a foreign malicious group to impact the US political environment through illegal means?

To answer this question, we went to the space that we, as security researchers, know best: the DarkWeb and the criminal underground. Later in our investigation, we discovered that threat actors participating in meddling would do the same. In an interview, a source based in Eastern Europe who claimed to actively participate in defamation and disinformation campaigns during local regional elections argued that approaching the DarkWeb is always their first step. The DarkWeb provides an established infrastructure of connections between people and an integrated network of specialists who value their reputation. This makes it possible to hire experts anonymously while minimizing the risk of being scammed or detected. Moreover, the networks of escrows and moderators that monitor deals within the community help ensure that hired actors will not disappear with the money.

To fully utilize the DarkWeb's trust-sharing networks, we selected several top-tier cybercrime forums and job sites. These forums were entirely devoted to hacking, carding, financial fraud, and other types of cybercrime. Their administrators and moderators prohibited political discussions, hacktivism, and similar activities. This suited our research goal of testing how active and efficient cybercrime syndicates can be when it comes to operations of a political nature.

As a result, in less than two weeks and without spending any money, we were able to assemble a pool of 40 experienced cybercriminals who had a coherent strategy and were eager to start the operation “the next day.” Three findings about this group were particularly important.

First, many actors were of international background. Our engagements were conducted through Russian-speaking forums for two reasons. This was the environment in which we could make the most accurate threat assessment and the most developed analysis. These communities are also home to the most sophisticated and experienced crime groups and actors.

Naturally, therefore, a large number of the actors interviewed were Russian-speakers (which does not suggest that these actors were ethnically Russian or Russia-based). However, we observed a significant number of non-Russian participants. The actors engaged claimed to be based in China, the MENA region, the EU, South Asia, and Oceania. We assess with a high level of confidence that international actors are attracted by the infrastructure and network capabilities of top-tier Russian-speaking forums.

Second, criminals who demonstrated an interest in meddling activities came from every domain of cybercrime. This means that, when it comes to cyber-political manipulation, the threat environment is extremely malleable. Methods used by ransomware groups, carders, and traffic herders can be creatively weaponized for intrusive social operations within the US environment.

Third, despite the for-profit nature of the forums, some of the actors did have political experience. This group primarily focused on blackhat search engine optimization (SEO) and traffic herding to boost illegal content. These actors argued that their experience, tested in foreign environments, could be successfully transferred to a US setting.

Step 2. The Meddling Instruments

As the main investigative strategy was to uncover the adversarial perspective, we convinced the actors themselves to actively share their strategies and visions for the targeted goal. All of these suggestions can be categorized as adaptations of cybercrime to political needs, specifically: carding and financial fraud, ransomware and network intrusions, and web fraud and traffic manipulation.

Carding, also known as card fraud, is one of the major components of the modern cybercrime ecosystem. Carder skills seem distant from politics. However, they can be readily adapted for political meddling operations.

According to the threat actors, the first requirement for successfully spreading disinformation over social media is a system of impersonated accounts that appear authentic while bypassing social media platform algorithms. This process is almost identical to online credit card fraud. When carders make a purchase with a stolen card, they must bypass anti-fraud systems by acting exactly as the cardholder would. This sophisticated process requires obfuscating real digital fingerprints, using anti-detect frameworks, and complex social engineering. An experienced carder can operate a stolen US bank account, mimicking its real owner, and remain undetected. The same skills can be used to steal an account or create a sockpuppet on social media and successfully operate it to disseminate political content. In addition, carders offer to use their existing network of impersonated financial accounts to manage all the payments needed for the meddling operation.

Moreover, just as political campaigns sometimes require physical presence, carding operations have a physical dimension: so-called drop services. Every developed carding enterprise has a team of people who intercept stolen packages, verify purchases, and handle situations in which the physical presence of the impersonated account owner is needed. Drop services already have teams on the ground with an established infrastructure of fake identities, IDs, warehouses, and vehicles. These teams can be used for the physical implementation of disinformation, such as placing banners, distributing flyers, cold-calling, or even staging provocations.

According to the actors, malware and ransomware can be especially useful for sabotage. Well-organized malware syndicates make a living by breaching secure networks, including those of government, defense contractors, financial institutions, and healthcare. Environments belonging to political organizations are not always as well protected as some of these groups’ top targets. Syndicates often rely on top-tier credential-stealing crimeware, which enables them to obtain login credentials and access correspondence and financial records.

A targeted network breach may result in exposure of correspondence and other serious reputational damage, or in fatal business interruption caused by ransomware. Even if the breach team is unable to access the network or steal information, they may report a fake breach on top-tier underground forums while an affiliated social media team spreads misinformation about the cyber attack.

Finally, web traffic manipulation can easily be used for propaganda purposes. Various methods of search engine manipulation and traffic redirection make it possible to alter the search result order for a targeted query, source, or website. Redirected traffic from obscene sources such as pornography websites can be used to damage the ranking of legitimate web sources, while redirect pages can be used to increase the visibility of provocative content.

Step 3. From Stealing Money to Weaponizing Narratives

To understand more precisely how cybercrime can be applied to propaganda, we compiled the different strategic designs offered by the threat actors. The most efficient solutions emphasized creating a work process that organically embeds all the different methods in a single campaign, with each methodology amplifying the others. As a result, the final strategic view envisioned organizing the forty engaged actors into four Groups.

Group 1. Political Content Writers. This team would include content writers and social media promotion experts with previous experience in political meddling. In our pool of actors, we identified six people from the EU and Eastern Europe who were willing to take on these responsibilities and design a political engagement strategy. The most developed versions focused on the following methods, centered on the active tribalization and alienation of the political process:

Step 1. Identification of divisive content to amplify the feeling of political apathy. The types of content included racial divides, environmental issues, ethnic and confessional divides, immigration, crime rates, police brutality, unemployment, the urban/rural divide, utility pricing, and the housing crisis. It is noteworthy that even though the actors did mention racial and religious divides, their main emphasis was on socio-economic rather than identity hotspots.

Step 2. Group 1 will identify already-existing political content produced by radical and extremist proponents of ideologies related to the divisive issues. This content will become the foundation of the media campaign and will be actively disseminated to disproportionately flood the public discourse with radical ideas and, in this way, delegitimize it.

Step 3. Simultaneously, new content is produced (mostly via basic digital means) that focuses entirely on out-of-context controversial statements made by legitimate politicians, portraying them as proponents of a certain extremist ideology and as exclusive advocates for only a select voter group. In this way, the complex and multidimensional political agenda of legitimate candidates is reduced, exacerbating the social divide and increasing alienation between voter groups. Special negative targeting is aimed at moderate, reconciliatory, and universal-values-based political narratives designed to address the needs of all voters within the state. The threat actors emphasize that after a certain point, each message starts spreading on its own, creating a chain reaction due to the already high alienation and fragmentation of US society.

Step 4. In an atmosphere of mistrust and apathy, one of the political groups convenient for the meddling organizers is selected and mobilized. Mobilization efforts are also conducted through social media pages.

These measures aim to decrease voter turnout by spreading the perception that the candidates themselves are manipulators who serve the interests of select social groups and pursue narrow political agendas. With lower turnout, the meddling's chances of success increase because a smaller electorate dampens its effect less. Suppose the meddlers want a radical agenda to prevail. A small but very vocal and mobilized radical group that would otherwise receive marginal results (in absolute numbers) may then receive a high share in relative percentage terms, because the overall number of voters is low. If, on the contrary, the meddlers are interested in a moderate candidate, the erosion of political discourse can compel voters to align with the most rational candidate amid the overall panic.
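The turnout argument above is essentially arithmetic: a bloc whose size stays fixed claims a larger share of the ballots as everyone else stays home. The short Python sketch below illustrates this with purely hypothetical numbers: an electorate of 8 million eligible voters, chosen to fit the 10-15 million-resident model state, and a fully mobilized bloc of 400,000. The function name `bloc_share` and all figures are illustrative assumptions, not data from the investigation.

```python
def bloc_share(bloc_size: int, electorate: int, other_turnout: float) -> float:
    """Return the mobilized bloc's share of all ballots cast, in percent.

    Hypothetical model: every member of the bloc votes, while the remaining
    eligible voters turn out at the fraction `other_turnout` (0 < value <= 1).
    """
    if not 0 < other_turnout <= 1:
        raise ValueError("other_turnout must be in (0, 1]")
    if not 0 < bloc_size <= electorate:
        raise ValueError("bloc_size must be positive and no larger than the electorate")
    other_voters = electorate - bloc_size
    ballots_cast = bloc_size + other_voters * other_turnout
    return 100.0 * bloc_size / ballots_cast


if __name__ == "__main__":
    # Illustrative figures only: 8 million eligible voters, 400,000 in the bloc.
    for turnout in (0.6, 0.45, 0.3):
        share = bloc_share(400_000, 8_000_000, turnout)
        print(f"others at {turnout:.0%} turnout -> bloc holds {share:.1f}% of ballots")
```

With these assumed inputs, the same 400,000 votes grow from roughly 8% of ballots when other voters turn out at 60% to roughly 15% when they turn out at 30%, even though the bloc itself has not grown.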

As we can see, this approach bears similarities to the one attributed to the Internet Research Agency. However, it has a more coherent and logical internal structure. The available evidence regarding the Agency’s operations suggests that the troll farm spread provocative messaging massively and relatively randomly across different segments of US society. The paradigm adopted by the for-profit threat actors is more pragmatic and consistent. In their strategy, all the steps are tightly connected with one another, and all serve a clear final goal: the benefit of a selected political agenda. This is most likely because these actors’ main experience was in promotion and advertising (mostly of illicit content and commodities), and they approach political meddling as a marketing campaign.

It is noteworthy that the actors rarely mentioned fake news per se, since they find direct disinformation inefficient because it triggers account bans. Instead, they prefer to focus on content that does not directly misinform the audience. Such content shifts the emphasis in a disproportionate way, facilitating the erosion of political culture and minimizing the opportunity for discussion or a search for common ground between opposing political views.

Group 2. Promoters

This group takes the content identified or created by Group 1 and aggressively disseminates it. Its members are primarily individuals engaged in advertising illicit goods and in traffic herding.

To understand the process, we interviewed an employee of an Eastern European advertising agency engaged in the promotion of commodities that are banned, counterfeit, or illegal. Advertising a counterfeit drug is essentially the same as advertising a legitimate drug, but with advanced security measures to avoid bans and moderation. In the same way, promoting radical and marginal political content is the same as promoting legitimate content, only with more caution.

At the agency where the interviewee worked, the main approach was quantity-based. The agency's employees and contractors, based in Eastern Europe, the Middle East, South Asia, and Oceania, hired traffic herders and kept posting numerous messages daily. Thousands of cold calls were made to support the promotion. According to the interviewee, the agency tried to integrate robocalling, automation, bots, and even automatic content-generation tools, but the human-based approach proved the most efficient. Social media platform moderators often detected the automated tools (the agency focused on Facebook, Twitter, and Instagram), which decreased cost-efficiency. According to the interviewee, with the right experience and a human-based approach, it is still possible to significantly promote dubious content through reposting and manual message dissemination.

High-profile web fraud specialists share this preference for avoiding automation and bots in favor of customized solutions. During the investigation, we engaged with one such web fraud professional, a Russian-speaking hacker “Gantz” (alias obfuscated for security purposes). Their threat credibility was proven in 2019. This actor then offered access to hundreds of internal networks, FTP servers, and domain controllers of multiple US-based companies for an initial price of $50,000 USD. The compromised targets included:

A major Philadelphia web-design company

US financial and operational advisory firm

US private investment partnership

US medical software provider

Pennsylvania school district

Two healthcare companies

Major email marketing software provider

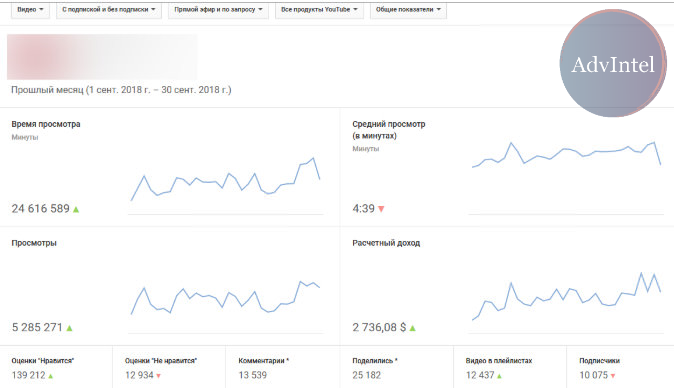

Even though Gantz is an experienced hacker, their main skill is YouTube and Google search engine fraud; they claim to have been in business for over 10 years. During this time, they allegedly used the YouTube platform to advertise services such as online gambling, or goods that were banned in a certain region. Their promotion strategy was simple. They increased the search engine and YouTube visibility of content that would otherwise be marginalized or banned by moderators, or would have a low search ranking.

Gantz promotes content by running videos as YouTube pre-roll ads via Google AdWords. They use the Google AdWords feature for selecting a target audience based on multiple parameters, which makes the promotion of political content much more efficient. Gantz's approach relies on the fact that a video violating content agreements is forwarded to moderators when it receives a complaint, but it may take days until the video is actually deleted. Therefore, radical, divisive content should be shown to the audience from multiple accounts within a short time frame of 24-48 hours. With Google AdWords capabilities, it is possible to share content with millions of users within this window (the case study's target audience was 10-15 million).

However, implementing this seemingly simple strategy requires a large budget, anonymity, and the ability to avoid anti-fraud systems. To solve these issues, Gantz offers their carding skills and hacking experience. As a carder, they already have the entire infrastructure needed to maintain authentic digital fingerprints, including anti-detect frameworks, VPNs, virtual machines, etc. All of it has been calibrated and meticulously configured over years of impersonation operations used for card fraud. (For more information on impersonation via compromised accounts, please see our research on Enroll Services.)

Moreover, like any experienced carder, Gantz has access to trusted DarkWeb suppliers. These suppliers can offer access and credentials for compromised accounts and online banking pages of US citizens, which would be used for payments or for registering Google accounts. Gantz also abuses the Google account feature that allows a negative balance, i.e., continuing to advertise even when there are no funds. As with content violations, Gantz exploits the time window, since it may take days for Google investigators to suspend an account with a high negative balance.

Carders spend years honing their skills in bypassing bank anti-fraud systems. The same skills are used to maintain the digital authenticity of the stolen Google accounts and ensure they survive as long as possible. Finally, digital impersonation helps hide evidence that the meddling was committed by foreign actors, or misdirects the investigation. Gantz suggests using Iranian and Chinese digital fingerprints to create false indications of nation-state activity.

Currently, Gantz claims to maintain access to 12 legitimate Google accounts of US users and can create many more using stolen financial and credential data. The 12 accounts can accumulate enough advertising placement for around ten million views, the case study's target audience. By using stolen money and obfuscating the payments received from potential meddling organizers, Gantz would be able to obtain a percentage of the profit from the pre-roll ads they push. After calculating all expenses and potential profits, the actor requested $87,000 USD for an advertising campaign that would cover this audience with a focus on 5-7 second videos. This is a manageable amount for a foreign group planning an intrusion as sophisticated as election meddling.
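To put the $87,000 quote into perspective, it can be broken down into unit costs. The Python sketch below assumes the quote buys roughly ten million views spread evenly across the 12 Google accounts Gantz claims to control. The even split and the helper name `unit_costs` are hypothetical simplifications, not details the actor provided.

```python
def unit_costs(total_cost_usd: float, views: int, accounts: int) -> dict[str, float]:
    """Break a flat campaign quote into per-view, per-thousand-view (CPM), and per-account figures.

    Assumes views are split evenly across accounts (a simplification).
    """
    if views <= 0 or accounts <= 0:
        raise ValueError("views and accounts must be positive")
    return {
        "cost_per_view": total_cost_usd / views,
        "cpm": 1000 * total_cost_usd / views,  # cost per 1,000 views
        "views_per_account": views / accounts,
    }


if __name__ == "__main__":
    # Figures reported above: $87,000 quote, ~10 million views, 12 accounts.
    costs = unit_costs(87_000, 10_000_000, 12)
    print(f"cost per view: ${costs['cost_per_view']:.4f}")
    print(f"CPM: ${costs['cpm']:.2f}")
    print(f"views per account: {costs['views_per_account']:,.0f}")
```

Under these assumptions, the quote works out to under one cent per view (about $8.70 per thousand views), with each account carrying roughly 833,000 views.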

To make matters worse, we observed other for-profit YouTube fraudsters using tactics that can drastically amplify the social divide. For instance, one YouTube scheme grew in popularity in 2019 and is known in the Russian-speaking cybercrime community as “Grey YouTube Channels.” It involves creating replicas of authentic YouTube channels to gain views monetized via Google AdWords. Especially concerning is that these fraudsters recommend creating replicas of politically divisive channels, as these may yield many views. For instance, one of the leading Grey Channel fraudsters, operating under the alias “FrankyRich,” suggests replicating videos related to racial and ethnic tensions. This naturally gives additional voice and promotion to radical political groups and leaders.

Group 3. Intruders & Hackers

The main purpose of this team would be direct cyber espionage and sabotage. For these actors, the political aspect of the case is the least important, as they are simply given a target to attack. Due to the political nature of the potential targets in our case study (the political aspect was not disclosed to the actors), we focused on groups and actors with a proven record of successful attacks on political and government entities in the West.

The groups and actors who confirmed their commitment to targeted attacks:

“Achilles,” a likely Iranian threat actor responsible for the 2018 breach of the Austal defense shipbuilder.

“Amiak” Group, a Russian-speaking ransomware syndicate that claims to have breached LAPD databases.

“Vestl,” a Russian-speaking hacker who offered access to a US police station and was able to provide evidence of this breach.

For all these actors, the requested price for one breach plus a ransomware upload was only around $5,000 USD.

Among the network intruders engaged through this investigation, one stood out for their skill and profile. The Russian-speaking hacker “b.wanted” offered access to a Virginia-based defense contractor that provides managerial, technical, and cyber solutions to numerous US state entities, including military and security departments. AdvIntel notified US law enforcement about the threat.

b.wanted is a high-profile actor with a solid reputation on the forums. Our analysis covered linguistic, behavioral, and operational indicators, including the language and style of their offers and the way b.wanted communicates with potential buyers. Based on it, b.wanted is likely connected to the hacker collective FXMSP, which was responsible for an attempt to breach US antivirus companies in May 2019. For instance, b.wanted relies on a multi-layer set of access backups that includes botnet infection, access via credentials, access to the domain controller, and access via Remote Desktop Protocol (RDP). These four types of access back each other up: if one method is compromised, the others still maintain the actor’s visibility into the victim’s network. The FXMSP team actively used this kind of resilience-building.

b.wanted has offered multiple high-profile breaches, including of US-based healthcare and government entities, and has attracted broad attention from the community. Most importantly, they were noticed by “Kerberos,” one of the leading ransomware professionals and a close affiliate of the REvil ransomware group.

The defense contractor breach is likely the most valuable item on b.wanted's current list. According to the intruder, they have access to 100 workstations within the victim’s network, through which it would be possible to intrude into the government clients’ datasets. They specified that, on request, they can use RDP access either to upload the customer’s malware or to access secure databases, depending on the customer's preference. In addition to RDP, they had a second access vector through a botnet with active bots inside the victim’s environment. The botnet kept gathering credentials from within the victim’s system. Among them, the actor was able to identify credentials belonging to the contractor’s federal government customers. Finally, b.wanted backed up access by breaching the system's domain controller.

The willingness of such actors to participate in a meddling operation would let the meddling group use their advanced capabilities to attack a political group or a candidate. The goal could be to obtain sensitive information or correspondence, or to disable the target's digital infrastructure with ransomware.

Group 4. Physical Operations

This group consists of actors who offered to coordinate individuals who will be physically present in the US during the campaign. Physical operations for this case can be conducted by drops, also known as mules.

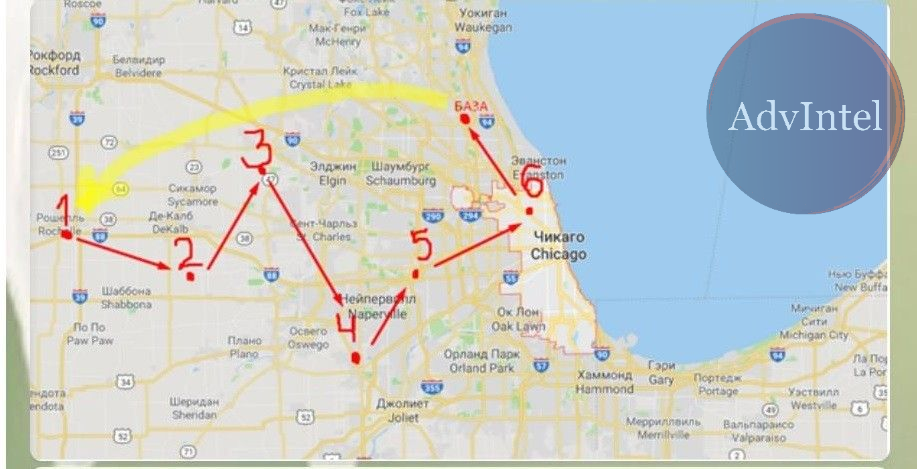

Drops are essentially the human infrastructure of cybercrime, and their services can be used for political purposes as well. Eastern European cyber syndicates are among the criminal groups that establish fencing networks connecting different types of crime, from smuggling and counterfeiting to establishing fictitious entities, impersonation, and identity theft. To understand how these networks might contribute to a meddling effort, we engaged with one of the oldest fencing syndicates, operating since at least 2010: the Gershuni Syndicate (alias obfuscated for security purposes).

The group has been operating in the New York City and Chicago areas, generating its main income through massive purchases of items with stolen payment cards and then reshipping these items to the former Soviet Union or reselling them within the US. According to AdvIntel's assessment of partial evidence shared by syndicate members in Telegram channels, the group's turnover may have reached up to $400,000 USD per month.

A political manipulation campaign requires numerous on-the-ground actions, from posting banners and handing out flyers to staging provocations at rallies, organizing those rallies, or even destroying an opponent's property or staging fake physical attacks to frame a certain group.

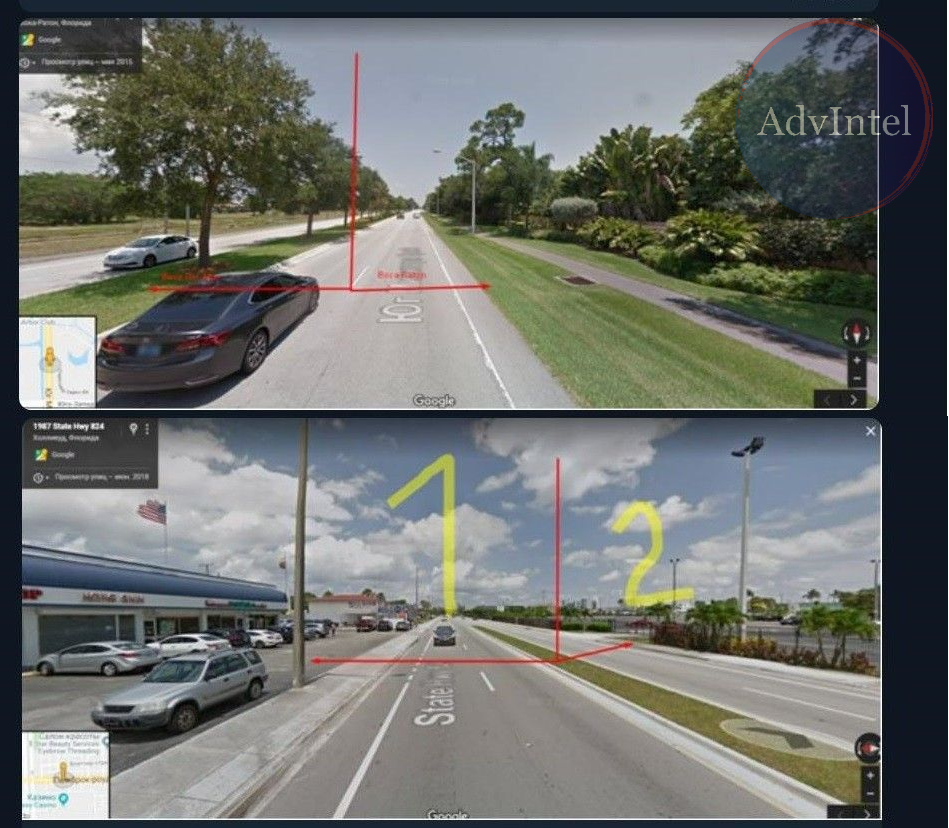

Mule networks, including the Gershuni group, provide all the on-site logistics, including printers and laminators for fake IDs and promotional materials (each mule network uses expensive printers to print fake credit cards and IDs), warehouses, and vehicles, as well as managers to coordinate the logistics. Moreover, mule services know how to maintain a strict security protocol, including checking for surveillance devices and registering a company in the US as a cover for operations (car repair, dry cleaning, car wash). The Gershuni group offered the following infrastructure for creating an efficient criminal enterprise:

- Legalization: fictitious or cover enterprises, legal businesses, etc.
- Full-time staff
- Freight cars and trucks
- Warehouses
- Transportation, living, and rental costs of moving into the US
- Excellent knowledge of English
- Patience to wait through several months of low revenue while the service establishes its niche in the market

The operational logistics of a drop service also involve skills essential for an illicit political operation. Drop operatives are well accustomed to high-stress, high-risk environments, and they are trained to deal with US law enforcement. According to a Gershuni representative, carding syndicates in the US are very resilient networks due to the decentralized structure of the nation's police forces. Even if one operative is caught in a certain district, the local police department will most likely lack the capability to track down the entire network, which is scattered across the state and based in the jurisdictions of other departments.

Knowledge of district jurisdictions is the basis for choosing locations, routes, shipping points, and so on. According to the lecturer's personal experience with US law enforcement, each time one of the drops was caught, the local police were unable to initiate a full-scale investigation and usually stopped pursuing the network after catching one operative. For this reason, the warehouse is always located in a different district from all other operations. The only exception is highway state troopers; therefore, it is considered crucial to always obey traffic rules and to be able to spot undercover trooper cars during a ride.

Scenario Example

We began the investigation by engaging with individuals who claimed to have previous experience in regional election meddling. They prioritized strategies that combine all the cybercrime capabilities a foreign, politically motivated group may draw on for its goals. The following is an example campaign scenario developed by these professional meddlers:

1. Group 4: US-based mules stage a provocative video of a physical attack on an individual, attributed to a selected social group.
2. Group 2 promotes the video on YouTube, adding a short comment aimed at exacerbating hostility toward the selected social group. The comment is readable during the first five seconds.
3. Group 2 actively popularizes videos reacting to the initial one, using the same methods.
4. Group 1, the political content experts, posts comments on other social media platforms to guide the discourse, using sockpuppet accounts created by carders from Groups 2 and 3.
5. Group 3 hackers infiltrate the networks of a legitimate candidate and publish segments of correspondence that place this candidate on one side of the already emerging debate.
6. Group 4 places provocative banners related to the debate to further aggravate it.
7. Groups 2 and 3 actively use accounts with the digital fingerprint of a foreign country to promote one of the legitimate politicians. Group 2 then publishes a pre-staged investigation suggesting that the politician is supported by a foreign power.

Conclusion

Cybercrime and political manipulation can be organically intertwined; moreover, cybercrime serves as an enabler for meddling. For-profit actors, especially financial fraudsters, efficiently adapt proven methods of digital impersonation to wage disinformation campaigns, while malware experts may demonstrate a willingness to perform targeted attacks on political targets. By offering these services, cybercrime significantly lowers the entry barrier for political manipulation. The underground criminal infrastructure is easily accessible, and a malicious actor does not need nation-state or APT capabilities to use these services to achieve a broad and efficient political impact.

Cybercrime makes political operations significantly more cost-efficient. The dark web and the criminal underground can be used to conduct a meddling campaign, with a team assembled in only two weeks. The overall budget for such a campaign is relatively affordable. For our case study of a state with a population of 10-15 million, we calculated that Group 1 would require a budget of $7,000 USD for content writing and Group 2 would take $90,000 USD for promotion, while Group 3 charges $5,000 USD per breach and setting up a mule network would cost around $15,000 USD. Since the services of Groups 3 and 4 are supplementary, the core budget (Groups 1 and 2) comes in below $100,000 USD.
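The sub-$100,000 figure follows from the core services alone. As a quick check of the arithmetic, assuming (our reading) that "supplementary" means Groups 3 and 4 are excluded from the core total, and counting Group 3 as a single breach in the second line:

$$
\underbrace{7{,}000}_{\text{Group 1}} + \underbrace{90{,}000}_{\text{Group 2}} = 97{,}000 < 100{,}000 \text{ USD}
$$

$$
97{,}000 + \underbrace{5{,}000}_{\text{Group 3, one breach}} + \underbrace{15{,}000}_{\text{Group 4}} = 117{,}000 \text{ USD}
$$

Each additional breach purchased from Group 3 would add another $5,000 USD to the second total.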

Cybercrime amplifies the capabilities of meddling campaigns by involving criminals with international backgrounds and a pragmatic, balanced approach. Even threat actors operating on Russian-language forums who are interested in meddling activities bring political insights from their operational environments in the EU, MENA, and Asia. These actors prefer to rely on balanced, human-based approaches rather than on automation and bot creation. They tend to favor divisive interpretations of political and social events over direct misinformation and fake news. By organically combining different methodologies, these groups develop consistent, pragmatic approaches that may be more effective than the unilateral strategies employed by politically driven nation-state groups.